LegalNeer

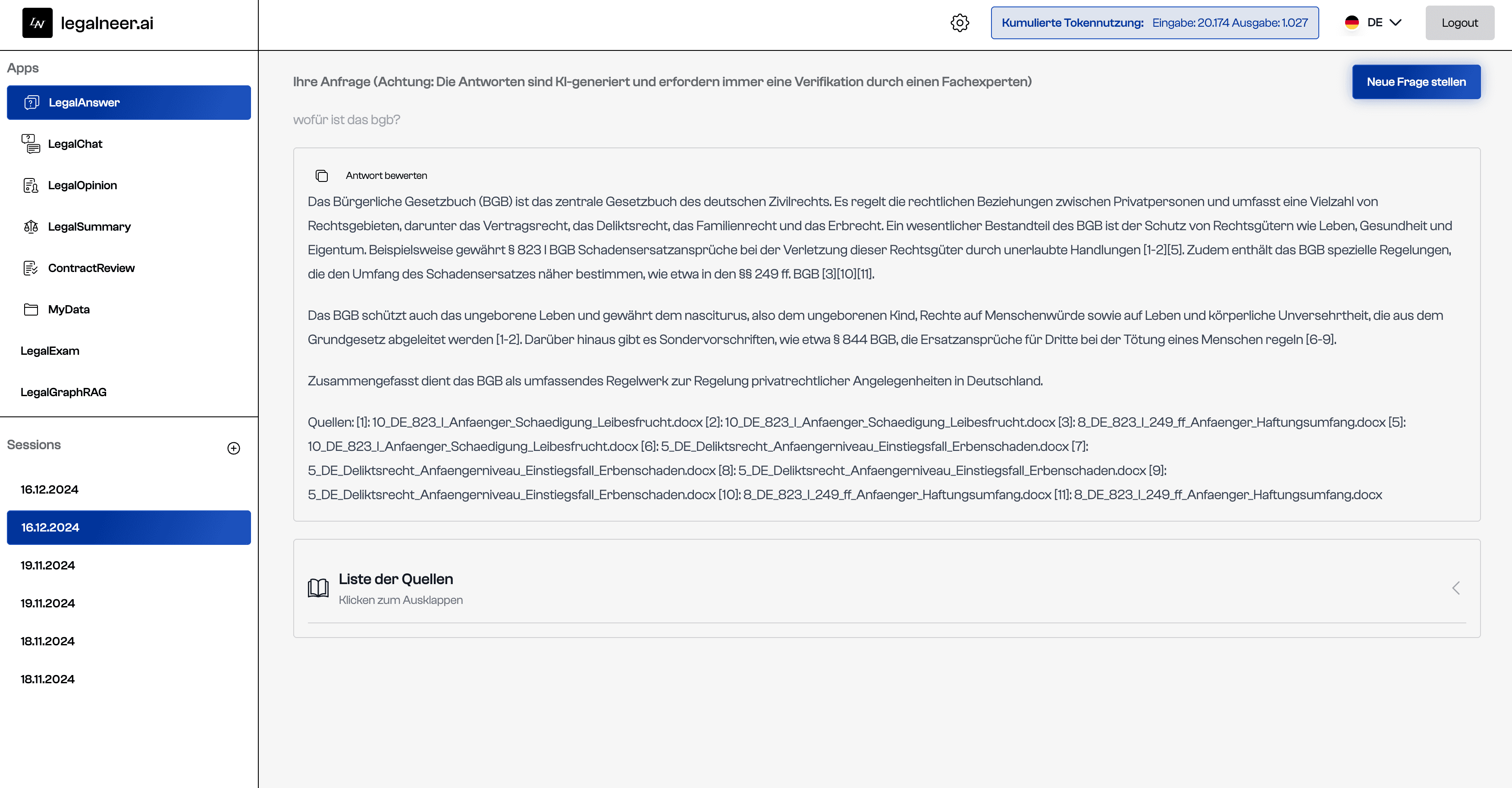

AI-powered legal analysis SaaS with real-time streaming, document knowledge bases, and consumption-based billing.

The Challenge

Legal professionals spend significant time reviewing, summarizing, and analyzing documents such as contracts, legal opinions, and case files. Existing tools offer generic AI chat interfaces that lack domain-specific context and structured legal workflows. The goal was to build a platform that provides multiple specialized AI applications (summarization, Q&A, chat, opinion drafting, contract review), each with access to the user's own document knowledge base, real-time streamed output, and granular usage tracking for billing.

The Approach

The architecture separates concerns across three services: a Next.js 14 frontend for the UI, a Django backend handling user management, file storage, authentication, and billing, and a FastAPI backend orchestrating LangChain-based AI chains. Communication between the frontend and FastAPI uses a custom Server-Sent Events (SSE) streaming pipeline, built on eventsource-parser on the client and sse_starlette on the server, which delivers output token by token. Authentication relies on RS256-signed JWTs (RSA with SHA-256): Django issues the tokens with its private key, and FastAPI verifies them independently with the public key, so no service-to-service call is needed per request. All services are containerized with Docker multi-stage builds and orchestrated via Docker Compose.

Implementation

AI Chain Architecture: Five distinct AI applications are implemented as LangChain Runnables: Legal Summary, Legal Q&A, Legal Chat, Legal Opinion, and Contract Review. Each chain combines prompt templates (stored in a central config.yml), document retrieval via Pinecone vector search (with configurable similarity/MMR strategies and Cohere reranking), and OpenAI model execution. Contract Review uses dynamic routing: a classification step determines the contract type (e.g., NDA, service agreement) and dispatches to a type-specific review chain. Legal Opinion includes chain-of-thought reasoning with structured output formatting.
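The classify-then-dispatch pattern behind Contract Review can be sketched in plain Python. This is an illustration only: the keyword classifier stands in for the LLM classification step, and the function names, type labels, and fallback chain are assumptions rather than the project's actual code.

```python
from typing import Callable, Dict

# Hypothetical type-specific review chains. In the platform these would be
# LangChain Runnables; plain functions keep the sketch self-contained.
def review_nda(text: str) -> str:
    return f"[NDA review] checked {len(text.split())} words"

def review_service_agreement(text: str) -> str:
    return f"[Service agreement review] checked {len(text.split())} words"

def review_generic(text: str) -> str:
    return f"[Generic review] checked {len(text.split())} words"

# Routing table: contract type label -> review chain (labels are assumptions).
REVIEW_CHAINS: Dict[str, Callable[[str], str]] = {
    "nda": review_nda,
    "service_agreement": review_service_agreement,
}

def classify_contract(text: str) -> str:
    """Stand-in for the LLM classification step (simple keyword heuristic)."""
    lowered = text.lower()
    if "confidential" in lowered or "non-disclosure" in lowered:
        return "nda"
    if "services" in lowered and "provider" in lowered:
        return "service_agreement"
    return "unknown"

def route_contract_review(text: str) -> str:
    """Classify the contract, then dispatch to the matching review chain."""
    contract_type = classify_contract(text)
    chain = REVIEW_CHAINS.get(contract_type, review_generic)
    return chain(text)
```

Keeping the routing table as data means a new contract type only needs one new chain and one new entry, without touching the dispatch logic.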

Streaming Pipeline: The frontend's /api/llm/[path]/route.ts acts as a streaming proxy. It instantiates a RemoteRunnable from LangChain, streams chunks from FastAPI, and forwards them to the browser via a TransformStream. Each chunk is JSON-encoded as an SSE data: event. The use-chat hook manages message state, abort controllers, and progressive rendering on the client side.
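The wire format described above, one JSON-encoded chunk per SSE `data:` event, can be illustrated with a small encoder and decoder. This is a minimal sketch in Python using only the standard library; the function names are hypothetical, and the real pipeline relies on sse_starlette and eventsource-parser rather than hand-written framing.

```python
import json
from typing import Any, Iterator

def encode_sse(chunk: Any) -> str:
    """Frame one chunk as an SSE event: a 'data:' line plus a blank line."""
    # json.dumps escapes newlines, so the payload always fits on one line.
    return f"data: {json.dumps(chunk)}\n\n"

def decode_sse(stream: str) -> Iterator[Any]:
    """Parse a buffer of complete SSE events back into JSON chunks."""
    for event in stream.split("\n\n"):
        data_lines = [
            line[len("data:"):].strip()
            for line in event.split("\n")
            if line.startswith("data:")
        ]
        if data_lines:
            yield json.loads("\n".join(data_lines))

# Round trip: what the server emits is what the client reassembles.
chunks = ["The", " contract", " is", " valid."]
wire = "".join(encode_sse(c) for c in chunks)
assert "".join(decode_sse(wire)) == "The contract is valid."
```

JSON-encoding each chunk keeps whitespace-only tokens and embedded newlines intact, which plain-text SSE payloads would otherwise mangle.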

Knowledge Base System: Users organize documents in hierarchical directories (implemented with Django MPTT). Files are uploaded to S3-compatible storage (Exoscale), chunked into 2000-token segments with 200-token overlap, and indexed in Pinecone with metadata (document type, legal field, timeframe). A FileKnowledgebaseState model tracks per-user inclusion flags, and a global knowledge base provides shared legal reference material. A recent overhaul restructured the entire knowledge base display and management system.
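The chunking step, 2000-token windows with a 200-token overlap, amounts to a sliding window with a stride of 1800 tokens. The sketch below assumes the text has already been tokenized into a list; the function name is hypothetical, and the tokenizer and splitter actually used by the platform are not specified here.

```python
from typing import List, Sequence

def chunk_tokens(
    tokens: Sequence[str], chunk_size: int = 2000, overlap: int = 200
) -> List[List[str]]:
    """Split tokens into windows of chunk_size that share `overlap` tokens."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # stride between window starts
    chunks: List[List[str]] = []
    for start in range(0, len(tokens), step):
        chunks.append(list(tokens[start:start + chunk_size]))
        # Stop once a window reaches the end, so no redundant tail is emitted.
        if start + chunk_size >= len(tokens):
            break
    return chunks

# Example: 5000 tokens yield windows starting at 0, 1800, and 3600.
example = chunk_tokens([str(i) for i in range(5000)])
assert [len(c) for c in example] == [2000, 2000, 1400]
```

The overlap means a clause that straddles a chunk boundary still appears whole in at least one chunk, as long as it is shorter than 200 tokens.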

Billing & Token Tracking: A custom TokenUsageCallbackHandler (LangChain callback) captures input/output token counts during chain execution and POSTs them to Django. Django records usage per session and triggers Stripe meter events for consumption-based billing. Subscription tiers (Free, Basic, Pro) gate access to specific AI applications via a PermissionChecker dependency in FastAPI.
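The two mechanisms in this paragraph, accumulating token counts for a usage report and gating applications by subscription tier, can be sketched as follows. Everything here is illustrative: the payload field names, the application identifiers, and in particular the tier-to-application mapping are assumptions, not the platform's actual configuration.

```python
from dataclasses import dataclass
from typing import Dict, Set

@dataclass
class TokenUsage:
    """Accumulates token counts across the LLM calls of one chain run."""
    input_tokens: int = 0
    output_tokens: int = 0

    def record(self, input_tokens: int, output_tokens: int) -> None:
        # Called once per LLM call, e.g. from a callback handler.
        self.input_tokens += input_tokens
        self.output_tokens += output_tokens

    def to_payload(self, session_id: str) -> Dict[str, object]:
        # Hypothetical body of the usage report POSTed to the billing backend.
        return {
            "session_id": session_id,
            "input_tokens": self.input_tokens,
            "output_tokens": self.output_tokens,
            "total_tokens": self.input_tokens + self.output_tokens,
        }

# Assumed mapping for illustration; the real tier rules are not documented here.
TIER_APPS: Dict[str, Set[str]] = {
    "free": {"legal_chat"},
    "basic": {"legal_chat", "legal_summary", "legal_qa"},
    "pro": {"legal_chat", "legal_summary", "legal_qa",
            "legal_opinion", "contract_review"},
}

def is_allowed(tier: str, app: str) -> bool:
    """Return True if the subscription tier may use the given application."""
    return app in TIER_APPS.get(tier, set())
```

Unknown tiers fall back to an empty set, so access is denied by default rather than granted by accident.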

Auth & Multi-language: JWTs are signed with RS256 using a private key held by Django; FastAPI and Next.js receive the matching public key as a base64-encoded value and use it to verify tokens. Social login (Google, LinkedIn) is supported via django-allauth. The frontend uses next-intl for full German/English internationalization with locale-based routing.
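Two small pieces of the verifying side can be shown with the standard library alone: unwrapping a base64-distributed public key, and rejecting any token whose header does not declare RS256 before verification is attempted. This sketch does not check the signature itself; in practice that step would be delegated to a JWT library (for example PyJWT's `jwt.decode(token, public_key_pem, algorithms=["RS256"])`). The function names are hypothetical.

```python
import base64
import json
from typing import Any, Dict

def load_public_key_pem(b64_value: str) -> str:
    """Decode a base64-wrapped PEM public key, e.g. read from an env variable."""
    return base64.b64decode(b64_value).decode("utf-8")

def _b64url_decode(segment: str) -> bytes:
    # JWT segments drop base64 padding; restore it before decoding.
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)

def read_jwt_header(token: str) -> Dict[str, Any]:
    """Return the decoded (unverified) JOSE header of a compact JWT."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    return json.loads(_b64url_decode(parts[0]))

def require_rs256(token: str) -> None:
    """Reject tokens that declare any algorithm other than RS256."""
    alg = read_jwt_header(token).get("alg")
    if alg != "RS256":
        raise ValueError(f"unexpected JWT algorithm: {alg!r}")
```

Pinning the accepted algorithm guards against tokens that claim a weaker or symmetric algorithm in their header.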

Results

The platform ships five production-ready AI legal tools, each with real-time streaming, document-grounded retrieval, and session persistence. The three-service architecture cleanly separates UI, business logic, and AI orchestration, allowing independent scaling and deployment. The self-built streaming pipeline delivers sub-second first-token latency to the browser. Token-level usage tracking enables precise consumption-based pricing. The codebase supports multiple OpenAI models (GPT-4o, GPT-4.1, GPT-4-turbo) with configuration-driven switching.

Highlights

- Custom SSE streaming pipeline for LLM output

- LangChain-based multi-chain AI architecture

- Hierarchical document knowledge base system

- Consumption-based billing via Stripe metering

- JWT RS256 auth across three services

- Internationalized UI (German & English)